Building a CloudFront Cookie Dashboard

Using AWS Lambda@Edge, Kinesis, & QuickSight.

Every large consumer-facing #rails app I have worked on has on and off again spikes of cookie overflow issues. It is made infinitely more complex with multiple front end apps. I will be digging into this more over the next couple days.

— Dan Mayer (@danmayer) August 13, 2019

Dan's tweet several weeks ago reminded me of similar issues at Custom Ink. With numerous Rails applications composing our frontend, a love of A/B testing, and a history of cookie session overflow errors, every point in his thread hit close to home.

Today, Memcached backs most of our sessions, but we still have lots of A/B testing cookies on our site, and I was super curious to see if our users could be hitting CloudFront's limits. Which limits, you might ask? Headers! All request headers combined, including the Cookie header, must not exceed ~10K for a Classic Load Balancer origin or ~20K for an Application Load Balancer origin. Go over and you will see an error like one of the following.

```text
494 ERROR

The request could not be satisfied.
------------------------------------------------------------------
Bad request.
------------------------------------------------------------------
Generated by cloudfront (CloudFront)
Request ID: tm06OdBjtr9g9Tlc8ml5Kpx6qU3klEYyZ4l-V0qy5NVsCZfDC0uQ9g
```

```text
400 Bad Request

Request Header Or Cookie Too Large
```
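Because these limits apply to all request headers combined, not just Cookie, it can help to estimate where a given request sits. The sketch below is a hypothetical helper, not part of our setup. It assumes the Lambda@Edge event shape, where each lowercase header name maps to an array of { key, value } objects. It also treats "~10K" and "~20K" as 10,240 and 20,480 bytes. The per-header "Key: value\r\n" accounting is an approximation, not CloudFront's documented byte counting.

```javascript
// Hypothetical helper: estimate the combined size of all request headers in a
// Lambda@Edge event and compare it with the approximate limits quoted above.
// Assumption: each header costs about "Key: value\r\n" bytes; CloudFront and
// your load balancer may count slightly differently.
const APPROX_LIMITS = {
  classicLoadBalancer: 10 * 1024,     // "~10K", assumed to be 10,240 bytes
  applicationLoadBalancer: 20 * 1024  // "~20K", assumed to be 20,480 bytes
};

// headers has the Lambda@Edge shape: { lowercasename: [{ key, value }, ...] }
function headerBytes(headers) {
  let total = 0;
  for (const name of Object.keys(headers)) {
    // Each header name maps to an array, so sum every entry it carries.
    for (const entry of headers[name]) {
      total += Buffer.byteLength(`${entry.key || name}: ${entry.value}\r\n`, 'utf8');
    }
  }
  return total;
}

function overLimits(headers) {
  const bytes = headerBytes(headers);
  return {
    bytes: bytes,
    classicLoadBalancer: bytes > APPROX_LIMITS.classicLoadBalancer,
    applicationLoadBalancer: bytes > APPROX_LIMITS.applicationLoadBalancer
  };
}

// Example: a 12,000-character A/B testing cookie plus a Host header.
const example = overLimits({
  host: [{ key: 'Host', value: 'www.example.com' }],
  cookie: [{ key: 'Cookie', value: 'ab_test=' + 'x'.repeat(12000) }]
});
console.log(example.bytes); // 12041: over the ~10K limit, under the ~20K one
```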

Our Goal

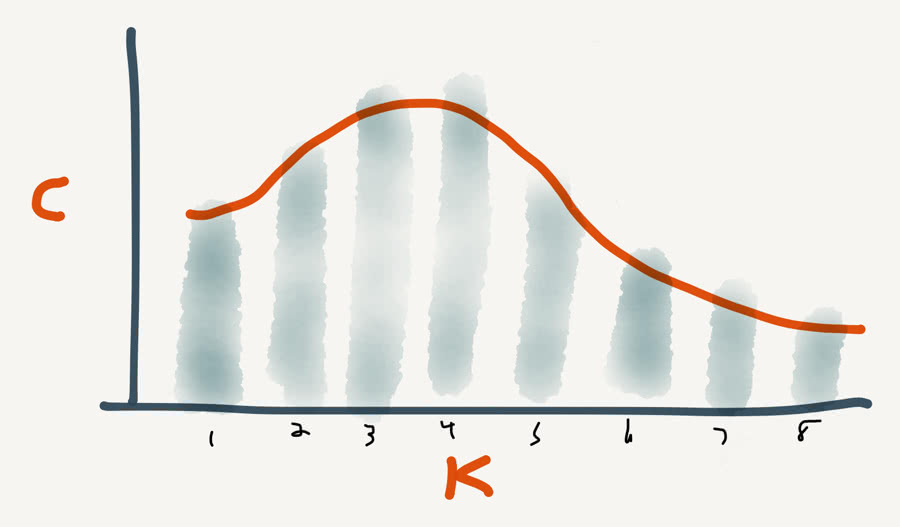

We need a simple visualization of our site's cookie usage, one that starts simple and can expand from there. I drafted the histogram above as a starting point. It groups cookie sizes into 1K intervals and shows the count of page views in each.
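To make the histogram concrete, here is a small sketch of the bucketing it implies. The function name cookieHistogram is hypothetical. The function rounds each Cookie header length to the nearest thousand, the same math the Lambda function later in this post uses. As a result, bucket 3 holds lengths from 2,500 up to, but not including, 3,500.

```javascript
// Hypothetical sketch: count page views per 1K cookie-size bucket.
// Rounds to the nearest thousand (Math.round), so bucket N covers
// lengths from N*1000 - 500 up to, but not including, N*1000 + 500.
function cookieHistogram(lengths) {
  const counts = new Map();
  for (const length of lengths) {
    const bucket = Math.round(length / 1000);
    counts.set(bucket, (counts.get(bucket) || 0) + 1);
  }
  // Sorted array of { size, count } pairs, smallest bucket first.
  return [...counts.entries()]
    .sort((a, b) => a[0] - b[0])
    .map(([size, count]) => ({ size, count }));
}

console.log(cookieHistogram([420, 980, 1499, 1500, 2600, 9800]));
// buckets 0: 1, 1: 2, 2: 1, 3: 1, 10: 1
```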

Ideally, our method for collecting these insights could be expanded later. For example, cookie names and relative sizes might be interesting. Also, doing time-series analysis and pushing that data in real time to a business intelligence dashboard would be beyond ya yeet. But we have a lot of decisions to make first.
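As a hypothetical illustration of the "cookie names and relative sizes" idea, which is not something we built here, a raw Cookie header could be split into name and size pairs, largest first. The function name cookieSizes is made up. Each size covers the whole "name=value" pair in UTF-8 bytes and ignores the "; " separators.

```javascript
// Hypothetical sketch: break a raw Cookie header into { name, bytes } pairs,
// sorted largest first, to see which cookies dominate the header.
function cookieSizes(cookieHeader) {
  return cookieHeader
    .split(';')
    .map((pair) => pair.trim())
    .filter((pair) => pair.length > 0)
    .map((pair) => {
      const eq = pair.indexOf('=');
      const name = eq === -1 ? pair : pair.slice(0, eq);
      // Size of the full "name=value" pair in UTF-8 bytes.
      return { name, bytes: Buffer.byteLength(pair, 'utf8') };
    })
    .sort((a, b) => b.bytes - a.bytes);
}

console.log(cookieSizes('session=abc123; ab_checkout=variant_b; theme=dark'));
// ab_checkout (21 bytes), then session (14), then theme (10)
```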

Methodology

The data we need could come from many different sources, from Apache/NGINX error logs (kinda) to instrumentation within your Rails application. Our decisions on how to collect this cookie data came down to a few factors:

- We have CloudFront logging (w/cookies) enabled.

- We were uncertain that logs would catch header overflows.

- We wanted a solution that was easy to turn on and off.

- Lambda@Edge was new to us and we needed some familiarity.

For this and a few other reasons, we settled on a solution pulled almost verbatim from the AWS blog post "Global Data Ingestion with Amazon CloudFront and Lambda@Edge". We made a few implementation and architecture changes to support our needs:

- Supporting infrastructure will be set up in one AWS Region, e.g. us-east-1. The Lambda code deployed to the edge will no-op outside this region.

- A new CloudFront Behavior with a specific myanalytics/cookies path, to which we will attach our Lambda and collect data.

This means our primary tools for collecting and reporting on this data will be:

- Lambda@Edge - Measure, Format, & Publish Data

- Kinesis Data Firehose - Capture Metrics from Lambda

- QuickSight - Visualize & Share Metrics

During this process, we learned that both Lambda@Edge and CloudFront cookie logging capture data up to around 20K in size. That is well beyond the ~10K threshold at which CloudFront shows the "494 ERROR" page. With a Classic Load Balancer origin, this solution has plenty of headroom past the failure states we want to measure. So let's start building!

Lambda Function

So the first thing we will need is an AWS Lambda function that we can deploy to a CloudFront distribution. Custom Ink makes use of AWS SAM to develop and deploy our Lambda functions. All code below is an approximation of our code and should work as-is, or at least get you started.

First, the index.js JavaScript file. This was written for nodejs10.x, which is in the list of runtimes deployable to the edge.

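```javascript
// Runtime: nodejs10.x, which bundles the v2 aws-sdk. Newer runtimes
// (nodejs18.x and later) ship only the v3 SDK, so this require fails there.
const AWS = require('aws-sdk');

// Created outside the handler so the client is reused across invocations.
const firehose = new AWS.Firehose();

// Empty HTML response generated at the edge; the request never reaches the origin.
const RESPONSE = {
  body: '',
  status: '200',
  statusDescription: 'OK',
  headers: {
    'cache-control': [{ key: 'Cache-Control', value: 'max-age=0, private, must-revalidate' }],
    // The charset belongs in Content-Type; Content-Encoding is for compression (gzip, br).
    'content-type': [{ key: 'Content-Type', value: 'text/html; charset=UTF-8' }]
  }
};

exports.handler = async function(event, context) {
  // The delivery stream only exists in us-east-1. Replicas running in other
  // regions skip recording but still return a valid response.
  if (process.env.AWS_REGION !== 'us-east-1') { return RESPONSE; }

  let cookies = '';
  const headers = event.Records[0].cf.request.headers;
  if (headers.cookie && headers.cookie[0].value) {
    cookies = headers.cookie[0].value;
  }

  const name = 'cloudfront-cookies-lambda-stream';
  // Cookie header length in thousands of characters, rounded (4321 -> 4).
  const size = Math.round(cookies.length / 1000.0);
  const time = (new Date()).toISOString();
  // Firehose concatenates records as-is, so end each one with a newline.
  const data = JSON.stringify({ size: size, timestamp: time }) + '\n';
  const params = { DeliveryStreamName: name, Record: { Data: data } };

  try {
    await firehose.putRecord(params).promise();
  } catch (e) {
    // Never fail the request just because the metric could not be recorded.
    console.error(e);
  }

  return RESPONSE;
};
```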

The code grabs the raw Cookie header and measures its length, rounded to the nearest thousand characters, so a size of 4 means roughly 4K. That size is pushed to our Kinesis Data Firehose delivery stream as a JSON payload along with a timestamp. The trailing newline matters because Firehose concatenates records without adding delimiters. Finally, the function returns an empty HTML response, so the request never needs to reach your origin.
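To see exactly what lands in S3, the record-building step can be pulled out into a pure function and checked without AWS. The sketch below shows a hypothetical refactor: buildRecord is not in our code. It uses the same math as the handler above.

```javascript
// Hypothetical refactor of the handler's record-building step into a pure
// function, so it can be unit tested without touching Firehose.
function buildRecord(cookieHeader, now) {
  // Same math as the handler: length in thousands of characters, rounded.
  const size = Math.round((cookieHeader || '').length / 1000.0);
  // One JSON object per line; Firehose will not add delimiters for us.
  return JSON.stringify({ size: size, timestamp: now.toISOString() }) + '\n';
}

const line = buildRecord('a'.repeat(4321), new Date(Date.UTC(2019, 9, 3, 12, 0, 0)));
console.log(line); // {"size":4,"timestamp":"2019-10-03T12:00:00.000Z"}
```

Note that Math.round puts a 1,499-character header in bucket 1 and a 1,500-character header in bucket 2. Math.floor would instead give buckets that start on each whole thousand.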

With our Lambda code done, we need a SAM CloudFormation template to create the additional resources that get our data into S3. Here is our template.yaml file. ⚠️ Do customize the BucketName, since S3 bucket names must be globally unique.

```yaml
AWSTemplateFormatVersion: '2010-09-09'
Transform: AWS::Serverless-2016-10-31
Description: CloudfrontCookiesLambda
Resources:
  CloudfrontCookiesLambda:
    Type: AWS::Serverless::Function
    Properties:
      FunctionName: cloudfront-cookies-lambda
      CodeUri: .
      Handler: index.handler
      Runtime: nodejs10.x
      Timeout: 5
      MemorySize: 256
      Role: !GetAtt LambdaEdgeFunctionRole.Arn
  LambdaEdgeFunctionRole:
    Type: AWS::IAM::Role
    DependsOn: FirehoseStream
    Properties:
      Path: /
      ManagedPolicyArns:
        - arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Effect: Allow
            Action:
              - sts:AssumeRole
            Principal:
              Service:
                - lambda.amazonaws.com
                - edgelambda.amazonaws.com
      Policies:
        - PolicyName: cloudfront-cookies-lambda-policy
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Action:
                  - firehose:PutRecord
                Resource:
                  - !Sub arn:aws:firehose:${AWS::Region}:${AWS::AccountId}:deliverystream/cloudfront-cookies-lambda-stream
  FirehoseSourceDataBucket:
    Type: AWS::S3::Bucket
    Properties:
      BucketName: !Sub cloudfront-cookies-lambda-bucket
  FirehoseStream:
    Type: AWS::KinesisFirehose::DeliveryStream
    DependsOn: FirehoseRole
    Properties:
      DeliveryStreamName: cloudfront-cookies-lambda-stream
      ExtendedS3DestinationConfiguration:
        BucketARN: !Sub arn:aws:s3:::${FirehoseSourceDataBucket}
        BufferingHints:
          IntervalInSeconds: '60'
          SizeInMBs: '5'
        CompressionFormat: UNCOMPRESSED
        Prefix: firehose
        RoleARN: !GetAtt FirehoseRole.Arn
  FirehoseRole:
    Type: AWS::IAM::Role
    DependsOn: FirehoseSourceDataBucket
    Properties:
      AssumeRolePolicyDocument:
        Version: '2012-10-17'
        Statement:
          - Sid: ''
            Effect: Allow
            Principal:
              Service: firehose.amazonaws.com
            Action: 'sts:AssumeRole'
            Condition:
              StringEquals:
                'sts:ExternalId': !Ref 'AWS::AccountId'
      Path: '/'
      Policies:
        - PolicyName: cloudfront-cookies-lambda-firehose-policy
          PolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Action:
                  - s3:*
                Resource:
                  - !Sub arn:aws:s3:::${FirehoseSourceDataBucket}
                  - !Sub arn:aws:s3:::${FirehoseSourceDataBucket}/*
```

This creates an S3 bucket for our data to land in. We create an AWS::KinesisFirehose::DeliveryStream and a supporting Role with full permissions to that S3 bucket. Lastly, we create the Lambda and a supporting execution Role. That Role allows our Lambda to call firehose:PutRecord on our newly created Kinesis Data Firehose delivery stream. Deploy the stack in us-east-1, since Lambda@Edge functions must be created in that region. Once you have packaged & deployed this Lambda, we are ready to get everything else set up.
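Before wiring anything to CloudFront, it can be reassuring to exercise the handler logic locally. The sketch below makes some assumptions. makeHandler and fakeEvent are hypothetical test helpers, not part of SAM or the AWS SDK. The event contains only the fields our handler reads. The Firehose call is replaced by an injected putRecord function so nothing leaves your machine.

```javascript
// Hypothetical test harness: the handler logic from index.js with the
// Firehose call and region injected, so it runs without AWS credentials.
function makeHandler(putRecord, region) {
  return async function(event) {
    const response = { status: '200', statusDescription: 'OK', body: '' };
    // Mirror the region guard: other regions skip recording but still respond.
    if (region !== 'us-east-1') { return response; }
    const headers = event.Records[0].cf.request.headers;
    const cookies = headers.cookie && headers.cookie[0].value ? headers.cookie[0].value : '';
    const size = Math.round(cookies.length / 1000.0);
    const data = JSON.stringify({ size: size, timestamp: new Date().toISOString() }) + '\n';
    try {
      await putRecord({ DeliveryStreamName: 'cloudfront-cookies-lambda-stream', Record: { Data: data } });
    } catch (e) {
      console.error(e); // a failed metric must not fail the request
    }
    return response;
  };
}

// Minimal origin-request event: only the fields the handler reads.
function fakeEvent(cookieValue) {
  return { Records: [{ cf: { request: { uri: '/myanalytics/cookies', headers: {
    cookie: [{ key: 'Cookie', value: cookieValue }]
  } } } }] };
}

const sent = [];
const handler = makeHandler(async (params) => { sent.push(params); }, 'us-east-1');
handler(fakeEvent('x'.repeat(10400))).then((response) => {
  console.log(response.status, JSON.parse(sent[0].Record.Data).size); // 200 10
});
```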

CloudFront Behavior

Now starts the ClickOps within the AWS Console. Navigate to your CloudFront distribution so we can create a new Behavior.

- Behaviors -> Create Behavior

- Path Pattern: myanalytics/cookies

- Object Caching: Use Origin Cache Headers

- Forward Cookies: All

- Query String Forwarding and Caching: Forward all, cache based on all

Scoping our Lambda to its own Behavior means that a Lambda@Edge function in an error state cannot break the rest of our site. That would be disastrous, since redeploying a fix to all edge locations can take up to 40 minutes.

Deploy To Lambda@Edge

Now we deploy our Lambda to "the edge" on the new myanalytics/cookies Behavior created above. Navigate to Lambda in the AWS Console and select our cookie Lambda.

- Actions -> Deploy to Lambda@Edge

- Distribution: SELECT YOUR DISTRO

- Cache behavior: myanalytics/cookies (IMPORTANT)

- CloudFront event: Origin request

- Include body: DO NOT CHECK

- Confirm & Deploy

Providing Data

Our myanalytics/cookies endpoint now needs data, and asynchronous JavaScript is a great candidate for providing it. Here is a small snippet that you can attach to your site or deploy via something like Google Tag Manager, which also makes it very easy to turn this data stream on and off.

```javascript
(function() {
  var cookieURL = '/myanalytics/cookies';
  var request = new XMLHttpRequest();
  request.open('GET', cookieURL, true);
  request.onload = function() {};
  request.onerror = function() {};
  request.send();
})();
```
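Every page view that runs this snippet invokes Lambda@Edge and writes a Firehose record. On a busy site, you may prefer to report only a sample. The wrapper below is a hypothetical addition and not part of our snippet. The reportCookies function and its sampleRate, send, and random options are assumptions made for illustration. The random function is injectable only to make the wrapper testable.

```javascript
// Hypothetical sampling wrapper: report cookies for only a fraction of page
// views to keep Lambda@Edge and Firehose volume (and cost) down.
// options.sampleRate: fraction of page views to report, e.g. 0.1 for ~10%.
// options.send: function that fires the beacon request for a URL.
// options.random: optional, defaults to Math.random (injectable for tests).
function reportCookies(options) {
  var random = options.random || Math.random;
  // random() returns a number in [0, 1), so this passes ~sampleRate of the time.
  if (random() >= options.sampleRate) { return false; }
  options.send('/myanalytics/cookies');
  return true;
}

// Browser usage, reusing the XMLHttpRequest call from the snippet above:
// reportCookies({
//   sampleRate: 0.1,
//   send: function(url) {
//     var request = new XMLHttpRequest();
//     request.open('GET', url, true);
//     request.send();
//   }
// });
```

With uniform sampling, the histogram should keep roughly the same shape. To estimate total page views, divide each count by the sample rate.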

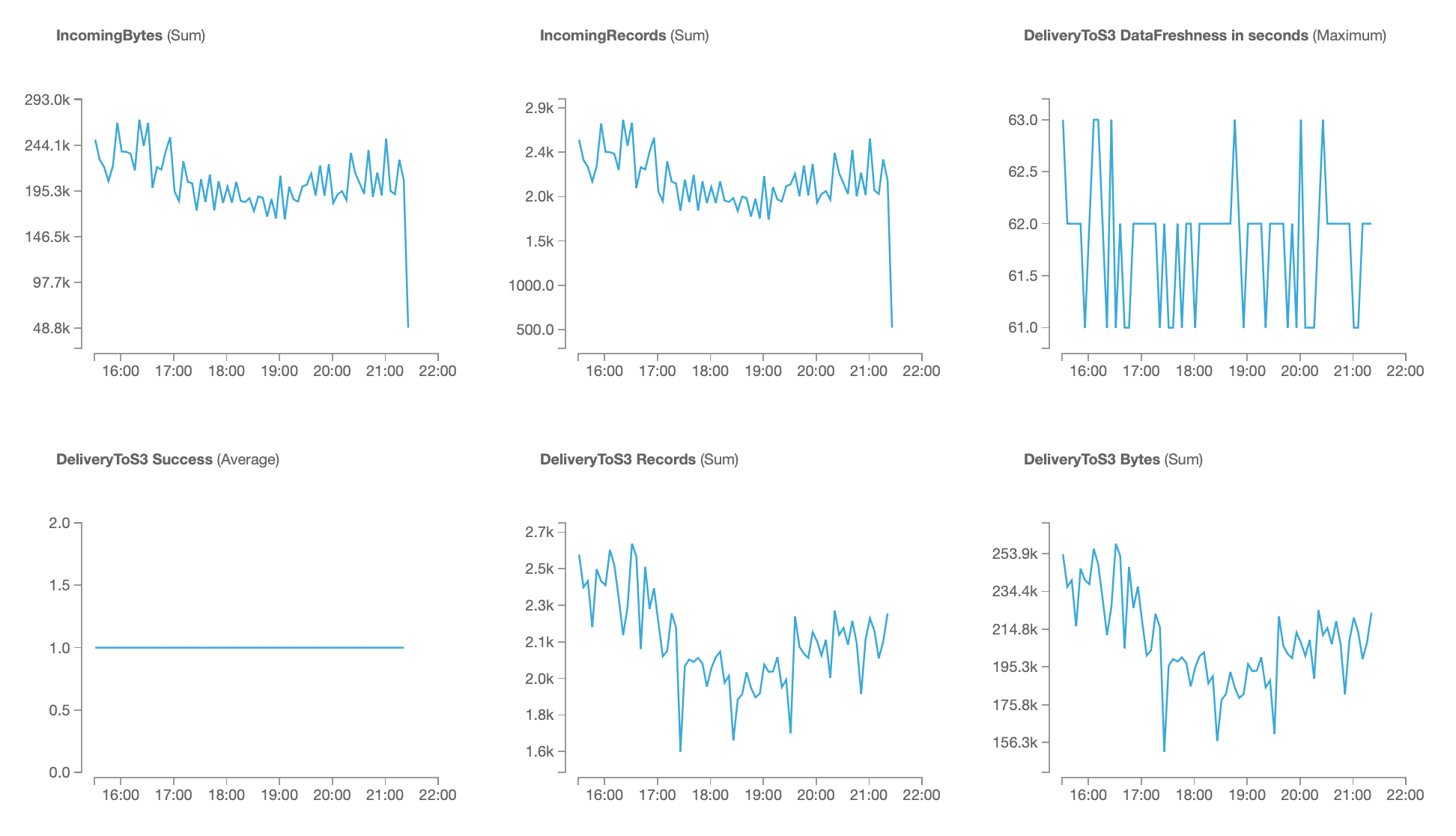

You can verify that data is flowing in by visiting the Monitoring tab of your Kinesis Data Firehose delivery stream. You should see something like the graph above.

Analytics with QuickSight

Finally, and assuming everything else is working, what good is data if we cannot visualize it? If you are new to QuickSight, here are some fun links to get you started.

Assuming you have a QuickSight account set up in your AWS Organization, we need to do a few things to ensure QuickSight has access to our CloudFront cookie S3 bucket, which will serve as our data source.

S3 Permissions

From the QuickSight main page, go to Manage QuickSight -> Security & permission -> QuickSight access to AWS services -> Add or remove.

If Amazon S3 is not checked, select it. If it is, click "Details", then "Select S3 buckets", and finally select your CloudFront cookie bucket. This allows QuickSight to access the data.

New Data Set

From the QuickSight main page, go to Manage Data -> New data set -> S3 and enter the following information.

- Data source name: CloudFront Cookies

- Upload a manifest file: (see below, replace the S3 bucket name)

```json
{
  "fileLocations": [
    {
      "URIPrefixes": [
        "s3://cloudfront-cookies-lambda-bucket/firehose2019/10/03"
      ]
    }
  ],
  "globalUploadSettings": {
    "format": "JSON",
    "containsHeader": false,
    "delimiter": "\n",
    "textqualifier": "'"
  }
}
```

So what is happening here? The URIPrefixes key points QuickSight at our S3 bucket, and the prefix/path is up to you. Kinesis Data Firehose automatically appends directory-style date paths (YYYY/MM/DD/HH, in UTC) to the configured Prefix. Because our Prefix is firehose with no trailing slash, the date follows it directly. For example, we could analyze all of October 2019 by using the path /firehose2019/10. QuickSight allows you to refresh data sources and change this later on.
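If you find yourself editing the manifest for different date ranges, a small script can build it. This is a sketch under assumptions. The helper names firehosePrefix and quickSightManifest are hypothetical. The bucket name is the placeholder from the template above. The upload settings simply mirror the manifest shown earlier.

```javascript
// Hypothetical helpers for building the QuickSight manifest above.
// Firehose appends "YYYY/MM/DD/HH/" (UTC) directly after the configured
// Prefix, so Prefix "firehose" yields keys like "firehose2019/10/03/...".
function firehosePrefix(bucket, prefix, year, month, day) {
  const pad = (n) => String(n).padStart(2, '0');
  let path = `${prefix}${year}/${pad(month)}`;
  if (day !== undefined) { path += `/${pad(day)}`; }
  return `s3://${bucket}/${path}`;
}

// Same settings as the manifest above; only the URI prefixes vary.
function quickSightManifest(uriPrefixes) {
  return {
    fileLocations: [{ URIPrefixes: uriPrefixes }],
    globalUploadSettings: { format: 'JSON', containsHeader: false, delimiter: '\n', textqualifier: "'" }
  };
}

// All of October 2019:
const october = firehosePrefix('cloudfront-cookies-lambda-bucket', 'firehose', 2019, 10);
console.log(october); // s3://cloudfront-cookies-lambda-bucket/firehose2019/10
console.log(JSON.stringify(quickSightManifest([october]), null, 2));
```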

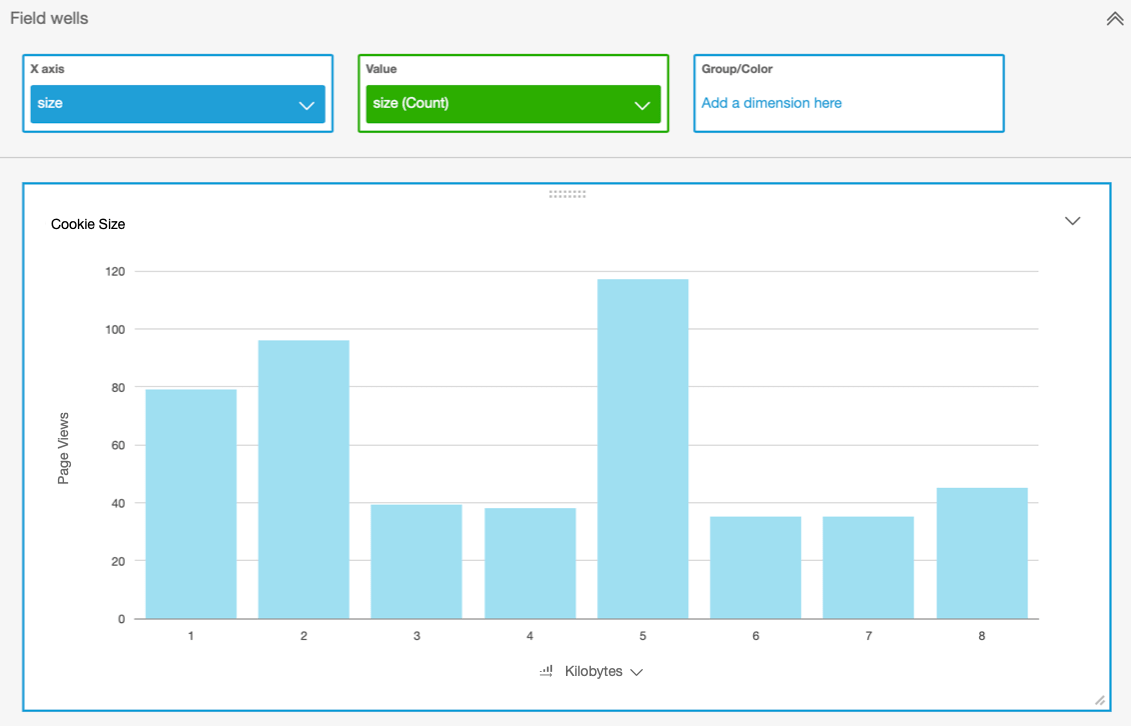

New Analysis

Finally, we get to see our data! From the QuickSight main page, click "New analysis", then choose your "CloudFront Cookies" data source. Select the "Vertical Bar Chart" visual type. Then build a simple analysis with size on the X axis and the count of records as the value, like the chart above. Congrats, you can now track your CloudFront cookie sizes!
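For a quick sanity check outside QuickSight, you could download one Firehose object from the bucket and summarize it directly. The sketch below assumes the object text is the newline-delimited JSON our Lambda writes. The summarize function is hypothetical, and its default threshold of 10 mirrors the ~10K Classic Load Balancer limit. Because sizes are rounded, size 10 already includes headers from 9,500 characters.

```javascript
// Hypothetical sketch: count page views in one Firehose object and the
// share whose rounded cookie size is at or above a threshold (in K).
function summarize(objectText, threshold = 10) {
  const records = objectText
    .split('\n')
    .filter((line) => line.trim() !== '')
    .map((line) => JSON.parse(line));
  const atOrAbove = records.filter((r) => r.size >= threshold).length;
  return {
    pageViews: records.length,
    atOrAbove: atOrAbove,
    share: records.length === 0 ? 0 : atOrAbove / records.length
  };
}

const sample = '{"size":2,"timestamp":"2019-10-03T12:00:00.000Z"}\n' +
  '{"size":11,"timestamp":"2019-10-03T12:00:01.000Z"}\n';
console.log(summarize(sample)); // { pageViews: 2, atOrAbove: 1, share: 0.5 }
```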

Resources

I hope you enjoyed learning a bit about Lambda@Edge and using Kinesis with QuickSight in this post. From here you should be able to do all sorts of cool things. I hope the articles below give you some good ideas, and we would love to hear from you if you liked this post or have other ideas. Thanks!

- Lambda@Edge Design Best Practices

- Analyze a Time Series in Real Time with AWS Lambda, Amazon Kinesis and Amazon DynamoDB Streams

- How to perform advanced analytics and build visualizations of your Amazon DynamoDB data by using Amazon Athena

- Amazon Kinesis Data Analytics for SQL Applications: How It Works

- Analyze your Amazon CloudFront access logs at scale

- How Requests Are Logged When the Request URL or Headers Exceed Size Limits

- TrueCar’s Dynamic Routing with AWS Lambda@Edge

- Aggregating Lambda@Edge Logs

- Building a Serverless Subscription Service using Lambda@Edge